Feedback on the Proposal

Firstly, the research proposal was too generic, as it only covered the surface of the topics that were brought up. The research objective was also vague about what exactly the research was meant to convey to readers. These are areas of concern that I need to look into. Some claims on certain topics were also not backed by cited evidence, which made them sound like baseless assumptions.

Realigning the Experiments

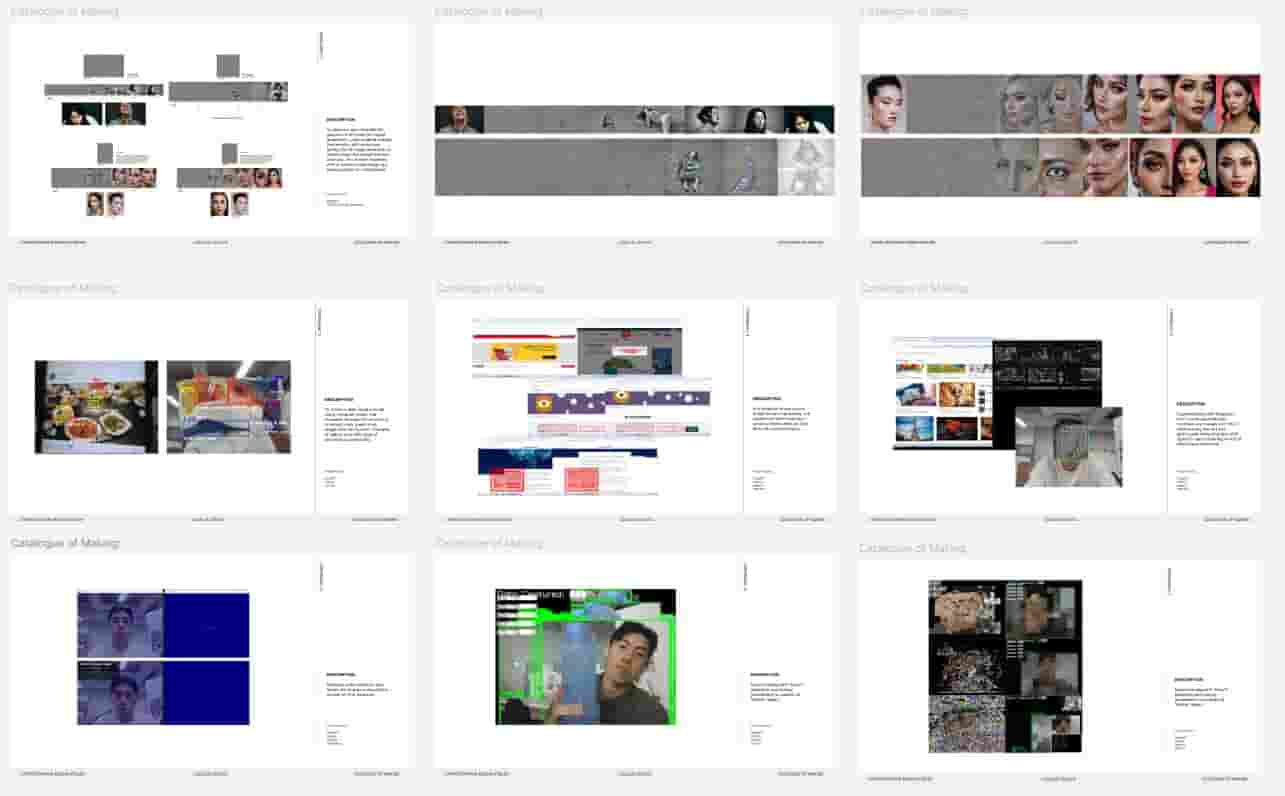

As of now, the experiments I had done were somewhat different from one another but shared a similar interest in looking at data as a commodity, with less focus on the effects of AI. Therefore, before moving on with the dissertation, I created a list of the experiments, including the different software used and images, to get a clearer look at them as a whole.

Thoughts

Even after listing all the experiments, I still had a hard time linking them to my current proposal because of how generic my topic was. More thorough research to define the issue I'm looking at will help narrow down the scope and find relations to the experiments I've done.

In Search of an Issue

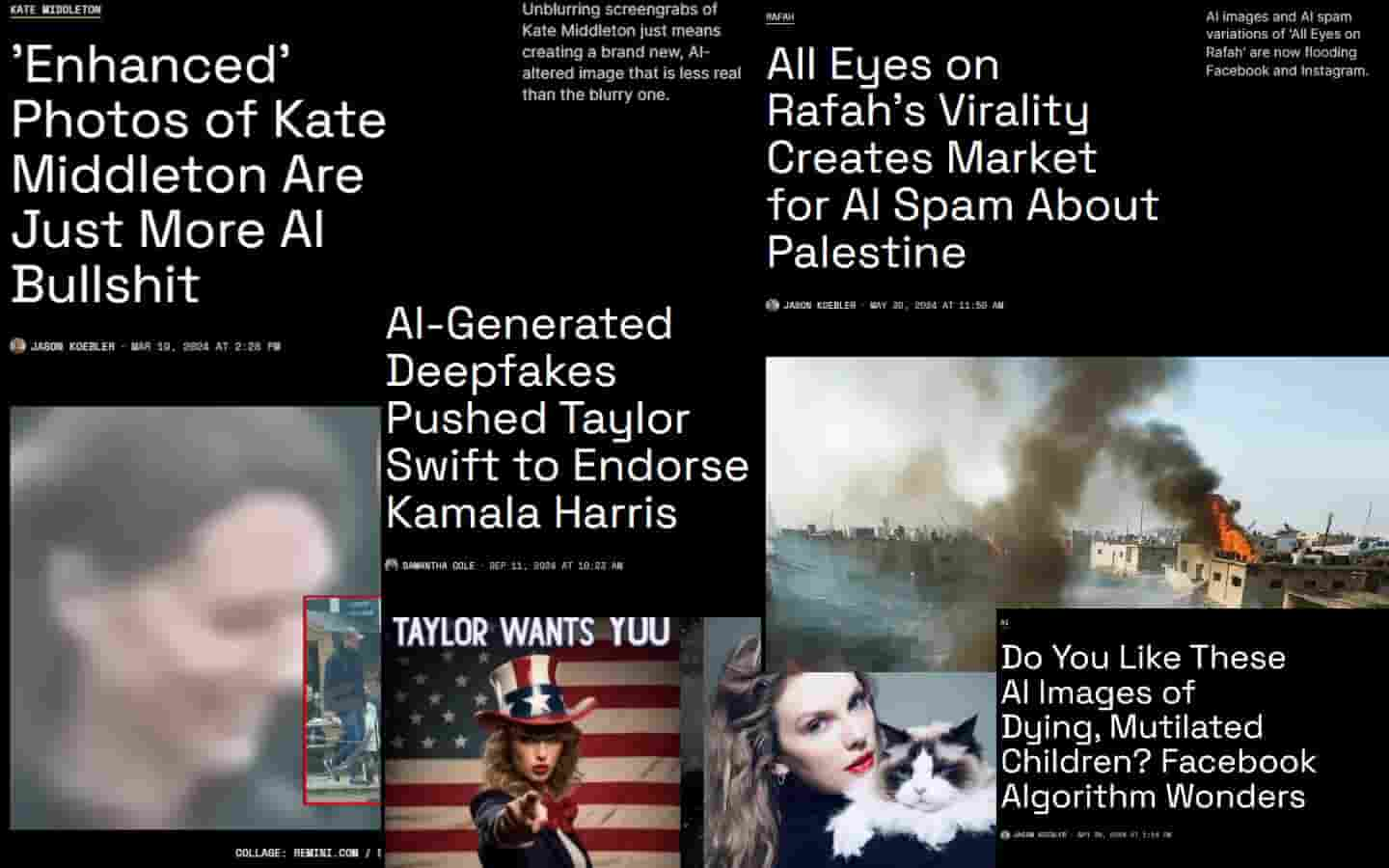

After being introduced to the 404 podcast and to Kate Crawford, who researches AI, I spent quite a few days reading articles and research papers that could help narrow down the issue that I'm targeting. I looked at existing articles as well, and one thing that stood out to me was that AI-generated content is repeatedly highlighted as an issue.

The last paragraph of the article really resonated with the topic that I wanted to convey: how such AI tools have become a commodity, and how AI-generated content is slowly saturating the digital space. I want to raise awareness because these AI models are trained on data, and some of that data is collected without consent by the applications that we use.

Refining the Experiment

Reflecting on my previous experiment, where I attempted to create a real-time diffusion visualizer using computer vision, I recognized an opportunity to refine and enhance the visual output. To push the visuals further, I introduced a new functionality: object detection that also outlines the shape of each detected object, a process known as segmentation. This was implemented using one of the pre-trained models available in YOLOv11.

After multiple iterations and experiments with ChatGPT, I successfully developed a basic detection and masking program. In this program, once an object is detected, the reconstruction window is overlaid with a skin-tone color and a mosaic filter. This approach was an attempt to simulate the appearance of censored nudity within the reconstructed visuals, exploring how such representations might provoke thought about privacy, surveillance, and the interpretation of visual data.
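As a rough illustration of the overlay and mosaic step described above, here is a minimal sketch in Python using only NumPy. It is not the program itself: the function names `mosaic` and `censor`, the `SKIN_TONE` value, the blend strength and the block size are all hypothetical choices, and the boolean `mask` is assumed to stand in for whatever region the segmentation model reports for a detected object.

```python
import numpy as np

# Hypothetical skin-tone colour in RGB order; any value could be used here.
SKIN_TONE = np.array([224, 172, 105], dtype=np.float32)


def mosaic(image, block=8):
    """Pixelate an image by replacing each block x block tile with its mean colour."""
    h, w = image.shape[:2]
    out = image.astype(np.float32).copy()
    for y in range(0, h, block):
        for x in range(0, w, block):
            tile = out[y:y + block, x:x + block]  # view into `out`
            tile[...] = tile.mean(axis=(0, 1))    # one flat colour per tile
    return out


def censor(frame, mask, tone=SKIN_TONE, alpha=0.6, block=8):
    """Tint the masked region with `tone`, pixelate it, and leave the rest untouched.

    frame: H x W x 3 uint8 image (assumed RGB).
    mask:  H x W boolean array, True where an object was detected (assumed input).
    """
    frame_f = frame.astype(np.float32)
    tinted = (1.0 - alpha) * frame_f + alpha * tone   # blend towards the skin tone
    pixelated = mosaic(tinted, block)                 # mosaic filter over the tinted frame
    out = np.where(mask[..., None], pixelated, frame_f)  # keep the effect inside the mask only
    return out.round().astype(np.uint8)


if __name__ == "__main__":
    # Synthetic stand-ins for a camera frame and a detection mask.
    rng = np.random.default_rng(0)
    frame = rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8)
    mask = np.zeros((32, 32), dtype=bool)
    mask[8:24, 8:24] = True
    result = censor(frame, mask)
    print(result.shape, result.dtype)
```

In a live setup, `frame` would come from the camera feed and `mask` from the segmentation output. Note that libraries such as OpenCV store frames in BGR order, so the tone would need its channels reordered there.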

However, due to time constraints, I decided to put this on hold for now, as it needs a clear purpose that adds value to the upcoming prototype, which will be the main focus.