DESIGN OPPORTUNITIES

As a designer, how can I convey the message differently from experts in the field? I went back to one of my "what if" narratives, about the objectification of human body parts through generated images, and created a quick mockup for a website that sells images of your body parts. The website features trending body parts that could be used for AI models, along with "news" on AI developments that people can tune in to. However, I felt it was a rather straightforward approach that still lacked the impact needed to raise awareness.

HAPPY ACCIDENT

I then tried to see things differently. While I was tinkering with computer vision again, I realised that one detection method is edge detection: turning the visuals into high-contrast black and white highlights the edges. This made me think about how AI-detection tools might work. They are similarly trained on the data fed to them in order to detect, but that was not what I was looking for.
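To make the edge detection idea concrete, here is a minimal sketch in plain Python. It is not the tool I was using; it assumes a grayscale image stored as rows of 0-255 values, and the function names and the threshold of 128 are my own placeholders. It turns the image into pure black and white, then marks every pixel where black meets white.

```python
# Minimal sketch: threshold to black and white, then mark pixels whose
# right or lower neighbour has the opposite value. Real tools usually
# rely on more robust operators such as Sobel or Canny.

def to_black_and_white(gray, threshold=128):
    """Turn a grayscale image (rows of 0-255 ints) into 0/255 values."""
    return [[255 if px >= threshold else 0 for px in row] for row in gray]


def find_edges(gray, threshold=128):
    """Return a same-sized image with 255 wherever black meets white."""
    bw = to_black_and_white(gray, threshold)
    height, width = len(bw), len(bw[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # At the image border, compare the pixel with itself (no edge).
            right = bw[y][x + 1] if x + 1 < width else bw[y][x]
            below = bw[y + 1][x] if y + 1 < height else bw[y][x]
            if bw[y][x] != right or bw[y][x] != below:
                edges[y][x] = 255
    return edges


# Hypothetical 4x4 test image: dark left half, bright right half.
sample = [
    [10, 20, 200, 210],
    [15, 25, 205, 215],
    [12, 22, 202, 212],
    [11, 21, 201, 211],
]
for row in find_edges(sample):
    print(row)
```

In this sample, only the column where the dark half meets the bright half is marked, which is the "highlighting" effect I noticed.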

I wanted ways that do not involve training AI models because, as I mentioned earlier, an oversaturation of AI-generated content will corrupt data that is deemed "higher quality". I was thinking about how we could detect things manually, with minimal reliance on advanced technology. I was then inspired by the idea that, under certain conditions, we are able to spot things better, such as infrared revealing heat. This led me to the idea of identifying AI images through a simple filter that brings out the imperfections, known as "artifacts", in these images.

PHOTOSHOP'S BLENDING LAYER EFFECT

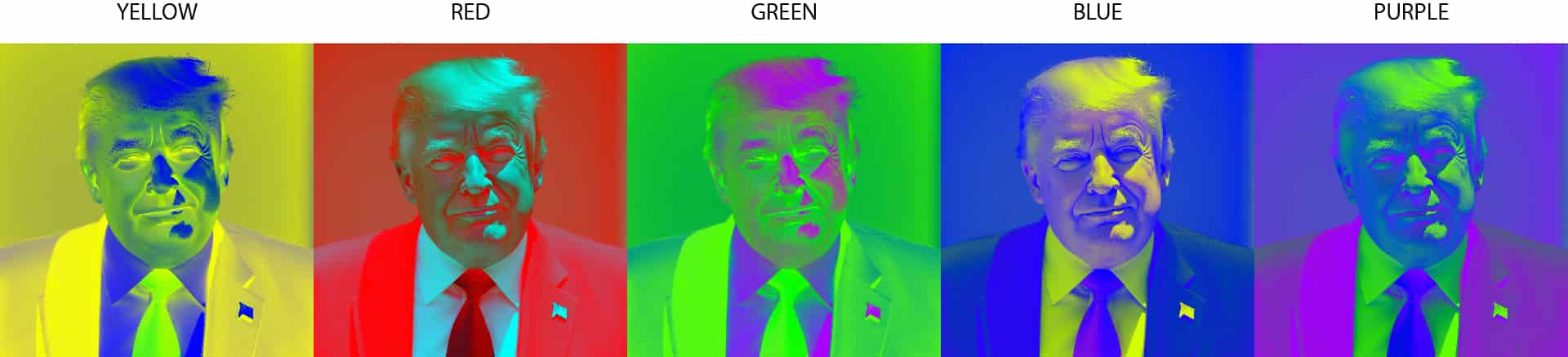

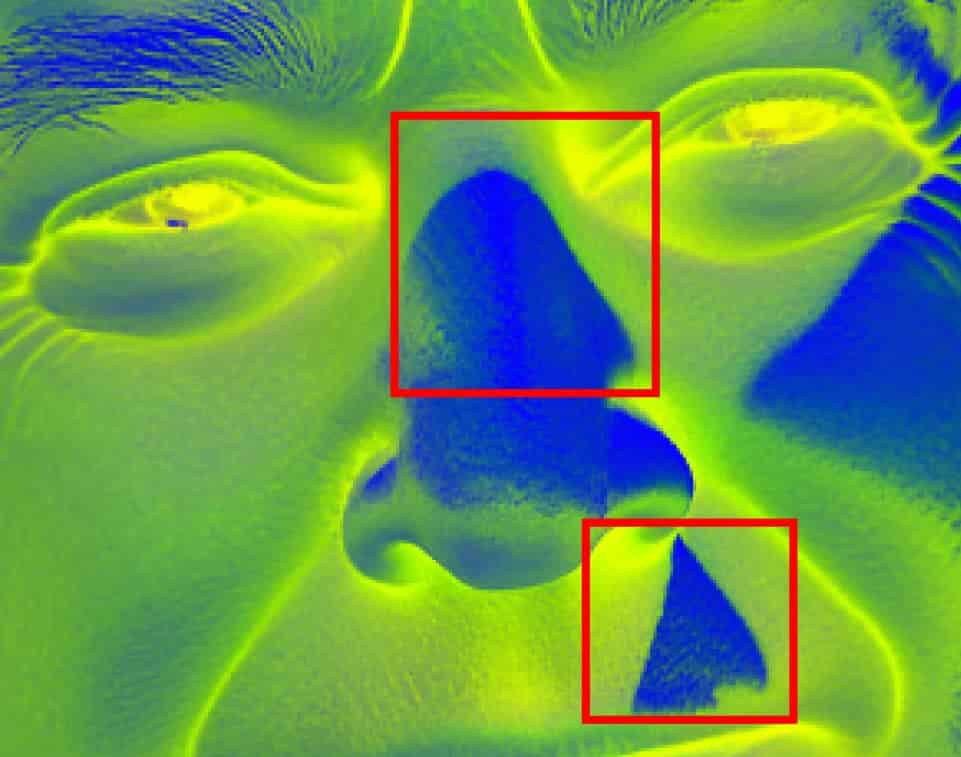

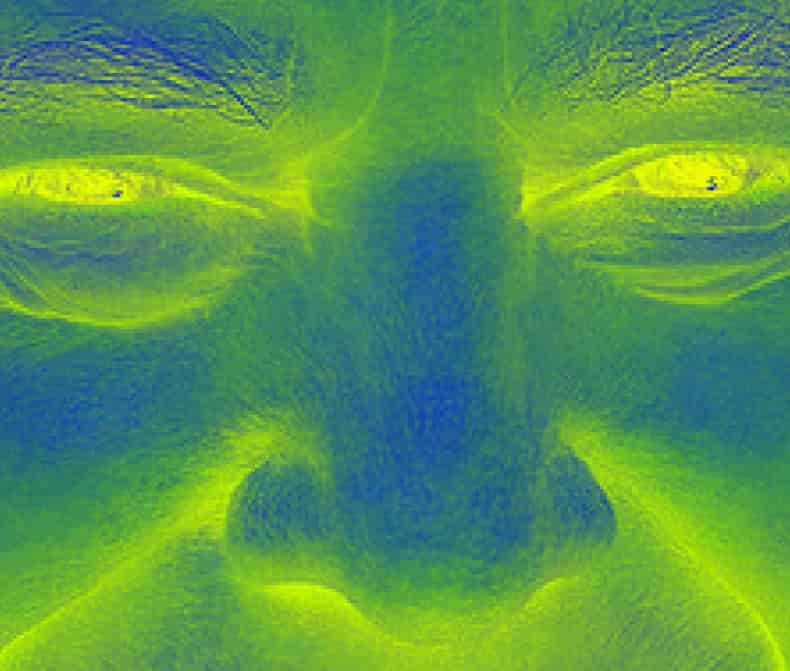

With the idea of introducing filters, I remembered that Photoshop has different blending effects for layers, which made me curious about whether they could bring out the imperfections in AI-generated images. I experimented with the different effects and found that the Difference effect might be the most effective. The color used for the Difference blend was chosen experimentally: I settled on yellow because, compared to the other colors I tried, it made the artifacts on AI-generated images easier for me to spot. However, I believe this needs further investigation.
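The Difference effect can be approximated outside Photoshop. The sketch below assumes the standard definition of a Difference blend (the absolute difference between the two layers, per channel) and a pure yellow of (255, 255, 0); the exact shade I used and Photoshop's color management are not reproduced here, and the function names are placeholders.

```python
# Minimal sketch of a Difference blend against a solid color layer.
# Assumption: pure yellow (255, 255, 0) stands in for the shade used.
YELLOW = (255, 255, 0)


def difference_blend(pixel, overlay=YELLOW):
    """Blend one RGB pixel: absolute difference on each channel."""
    return tuple(abs(p - o) for p, o in zip(pixel, overlay))


def apply_filter(image, overlay=YELLOW):
    """Apply the blend to every pixel of an image (rows of RGB tuples)."""
    return [[difference_blend(px, overlay) for px in row] for row in image]


# Hypothetical two-pixel image: a light skin tone and a dark shadow.
sample = [[(230, 180, 150), (40, 30, 20)]]
print(apply_filter(sample))
# [[(25, 75, 150), (215, 225, 20)]]
```

With yellow, the red and green channels are effectively inverted while blue is left untouched, which may be part of why skin tones shift so visibly under this filter.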

This blending effect highlights the odd texture in AI-generated images when compared with an original image that has the same blending effect applied. The example compares a generated image with an original photo; the aim was to highlight the irregular lighting, skin texture and other deformations in the generated image. The image generation method remains the same as before, using Flux.

In the images above, the filter highlights how unnatural the lighting is and how crisp the edges are. On top of that, the skin texture is clearly different from the real human skin texture in the image on the right. However, some may argue that the images were taken in different lighting environments and that the skin texture might be affected by the resolution of the image. Hence, I will run further experiments with a more structured process.

This experiment also raises the question of how this filter can be used as a tool: do I make a handheld scanner, or something web-based for ease of access? This reminded me that I could use computer vision as the input for the filter, so that it works for real-time detection. However, more exploration is required to see how it could be used in a speculative future.