WEB-BASED SCANNER

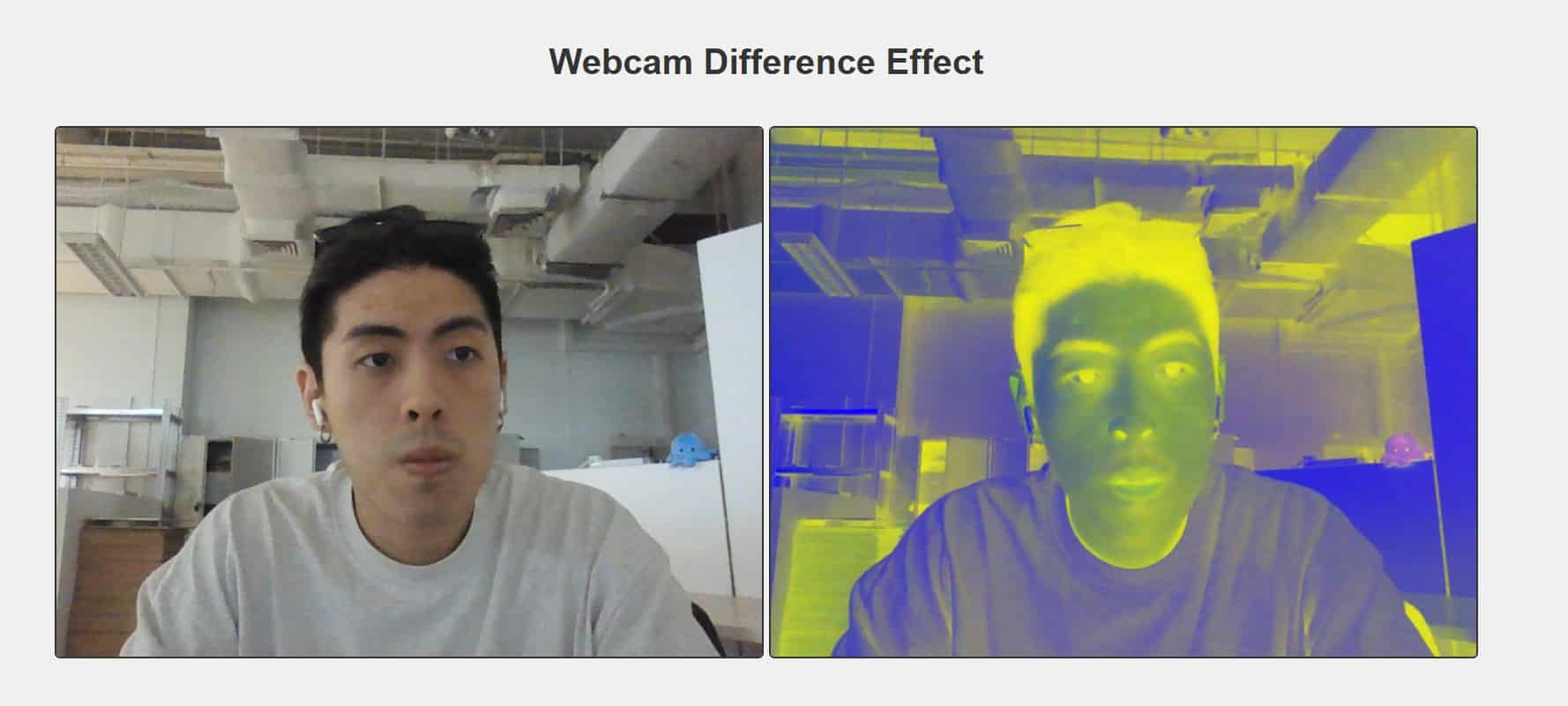

Following last week's suggestion to revisit the web-based scanner, I came across Grok 3, another AI chatbot that rivals ChatGPT. In the previous experiment I had asked ChatGPT for help, and that attempt failed. This time I described to Grok 3 the functions I wanted to incorporate into the system and how it should work, and it managed to resolve the issue I was facing: the scanner effect worked when I ran the code in Python, but failed to load when it ran in a web browser. Although it runs at a very slow frame rate, it now works the way I wanted. I still have to refine it for use on mobile, which may run into issues because it requires a lot of memory to run.

To get around these issues on mobile devices, I still have to work on the physical scanner with the ESP32-CAM, which also gives users an offline method. I also intend to see whether I can integrate the existing code into the ESP32-CAM. This would give users an alternative method besides the physical scanner.

SIDE TRACKING

I was trying to catch up on new developments in AI when I came across a report on Pimeyes, whose service has attracted some controversy. The news described Pimeyes as a double-edged sword. It is a face recognition tool, built on trained data, that can help people identify non-consensual use of their face. However, people have highlighted that the same service enables potential stalking, because it notifies users whenever the person they are tracking posts a new photo online. It also raises questions about how those images are obtained when a search is run. For these reasons I think it is best to rely on methods that do not involve machine learning, hence the suggested method using the difference blending filter.
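To make the difference blending idea concrete, here is a minimal sketch of what the filter computes. The journal does not spell out which two images the scanner blends, so this assumes two same-sized grayscale frames stored as nested lists of 0 to 255 values; the function name `difference_blend` is made up for illustration and is not the project's actual code.

```python
def difference_blend(base, overlay):
    """Return the per-pixel absolute difference of two grayscale frames.

    Assumption: both frames are lists of rows, each row a list of
    integers in the range 0..255, and both have the same dimensions.
    """
    same_height = len(base) == len(overlay)
    same_widths = all(len(a) == len(b) for a, b in zip(base, overlay))
    if not (same_height and same_widths):
        raise ValueError("frames must have the same dimensions")

    # Identical pixels come out black (0); the more two pixels differ,
    # the brighter the result.
    return [
        [abs(a - b) for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(base, overlay)
    ]


if __name__ == "__main__":
    frame_a = [[10, 200], [0, 255]]
    frame_b = [[10, 50], [255, 0]]
    print(difference_blend(frame_a, frame_b))
```

Because the result is plain arithmetic on pixel values, no trained model or face database is involved, which is the reason this approach is preferred here.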

EXISTING CHATBOTS

During my daily catch-up on the latest AI news, I was introduced to the conversational bot by Sesame AI, which is really impressive in the way it mimics human speech. Although each call with the bot is limited to 30 minutes, I spent at least an hour talking to this latest conversational AI model. It has the most human-sounding voice, complete with “erms” and “uhs”, although it does not always generate perfect pauses. It can detect a level of sarcasm, but it is unable to detect how loudly or softly I am speaking. I even tried role-playing a situation where I was in danger and needed it to speak softly so as not to expose my location. It was unable to do that and carried on at its usual voice level.

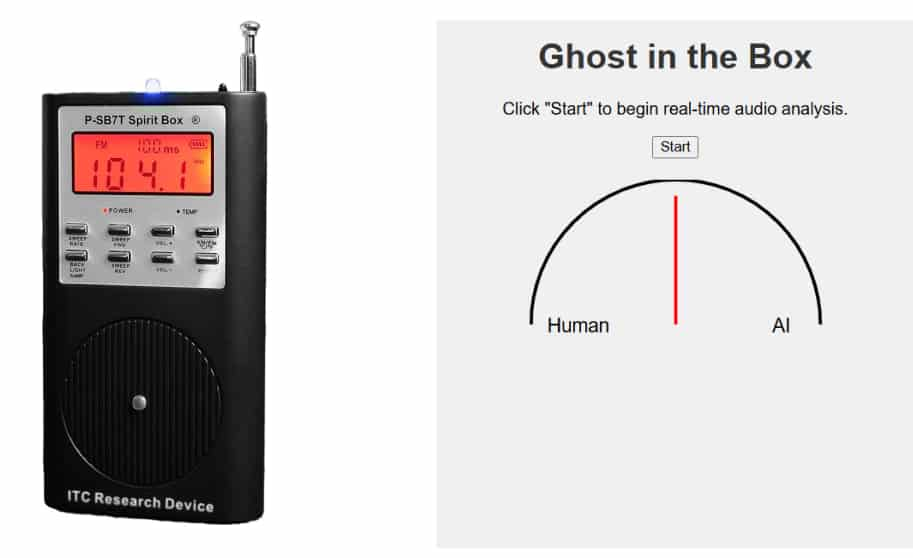

SPIRIT-BOX INSPIRATIONS

Using a primitive form of frequency analysis, the system captures data every 2 s and compares it against a generic frequency range for the human voice; anything outside that range is deemed AI-generated. In a way it works like a very wonky spirit box that quickly scans radio channels. The frequency is estimated with the zero-crossing rate (ZCR), which counts how often the audio signal changes sign. Measuring frequency this way helps to detect differences in speech between AI and human, though not at a very complex level, as it exists only as a gauge for people to make their own judgment. I do intend to turn this concept into a tangible output rather than keeping it web-based.
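The ZCR check described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the project's actual code: the function names are made up, the 85 to 255 Hz band is only a placeholder for the "generic" human voice range, and the test signal is a synthetic sine wave rather than a microphone capture. On real speech, ZCR gives only a crude frequency estimate.

```python
import math


def zero_crossing_rate(samples):
    """Fraction of adjacent sample pairs whose signs differ."""
    if len(samples) < 2:
        return 0.0
    crossings = sum(
        1
        for prev, cur in zip(samples, samples[1:])
        if (prev >= 0) != (cur >= 0)
    )
    return crossings / (len(samples) - 1)


def estimate_frequency(samples, sample_rate):
    """Rough frequency in Hz: a pure tone crosses zero twice per cycle."""
    return zero_crossing_rate(samples) * sample_rate / 2


def classify_window(samples, sample_rate, low_hz=85.0, high_hz=255.0):
    """Label one capture window.

    Assumption: low_hz and high_hz are placeholder bounds for the
    human voice range; the journal does not state the real values.
    """
    freq = estimate_frequency(samples, sample_rate)
    label = "human-like" if low_hz <= freq <= high_hz else "flagged"
    return label, freq


def sine_window(freq_hz, sample_rate=8000, seconds=2.0):
    """Synthetic 2 s test window standing in for a microphone capture."""
    count = int(sample_rate * seconds)
    return [
        math.sin(2 * math.pi * freq_hz * i / sample_rate)
        for i in range(count)
    ]


if __name__ == "__main__":
    for tone in (150.0, 1000.0):
        label, freq = classify_window(sine_window(tone), 8000)
        print(f"{tone:.0f} Hz tone -> {label} (estimated {freq:.1f} Hz)")
```

A single threshold band like this only acts as a gauge, which matches the intent above: the output is a hint for the listener, not a verdict.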

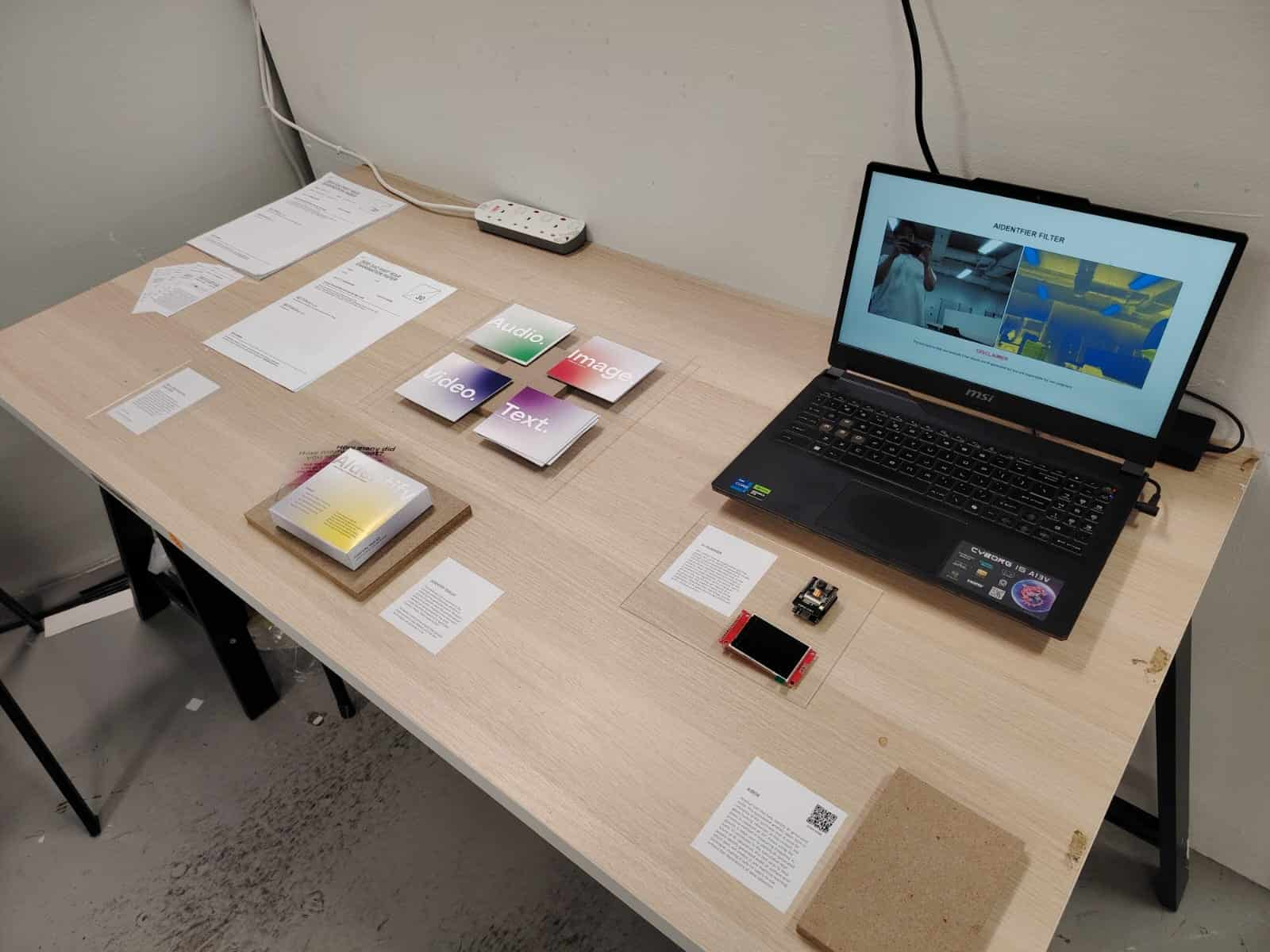

TABLE SET-UP UPDATE

To end the week, the class was tasked with setting up our tables to show our current progress. For most of the artifacts I have completed the functionality, but I still need to work on the visuals.